DASF - Databricks AI Security Framework

AI Security: How Our Team Secures AI with DASF and Databricks Tools

Introduction

AI is transforming every industry - from healthcare and finance to retail and manufacturing - unlocking new innovations and efficiencies. However, organizations face a dual challenge: leveraging AI for competitive advantage while managing new security and privacy risks. Businesses must balance the gas of innovation and the brakes of governance and risk management when adopting AI.

In practice, this means traditional security practices must evolve to address AI-specific threats like data poisoning, model tampering, and inadvertent leaks of sensitive information. AI Security and Governance have become critical to establishing trust in AI initiatives. In fact, AI trust, risk, and security management was identified as a major strategic trend, and organizations that invest in AI transparency and security could see a 50% increase in AI adoption and user acceptance by 2026.

At TechFabric, we recognize that robust AI security is not just about protecting data - it's about safeguarding the entire AI lifecycle, from raw datasets to deployed machine learning models. We have invested in frameworks and tools that allow our team to combine traditional cybersecurity best practices with AI-specific controls, ensuring our clients can confidently deploy AI solutions in a secure, compliant, and reliable manner.

Challenges in Combining AI with Traditional Security

Deploying AI systems introduces unique security challenges that traditional IT security practices don't fully cover. Classic cybersecurity frameworks focus on securing infrastructure, applications, and data access, but AI brings new facets such as training data integrity, model behaviors, and ML-specific attack vectors. Organizations today grapple with exploiting AI's benefits while managing risks like data breaches, model misuse, and regulatory non-compliance.

Some of the key challenges include:

Massive and Diverse Data - AI thrives on big data, often aggregating sensitive information from multiple sources. Traditional data security must scale to ensure proper access controls, encryption, and monitoring for these large, varied datasets. If training data is compromised (e.g., poisoned or leaked), it can corrupt AI outcomes and violate privacy. This is why the foundation of any trustworthy ML system is secure, high-quality data, and any compromise at this stage has downstream consequences on all other aspects of AI systems and outputs.

Complex AI Supply Chain - Modern AI development relies on open-source libraries, pre-trained models, and third-party AI services. This introduces ML supply chain risks such as malicious code in libraries or backdoored models. Traditional security teams may not have visibility into these components. Without proper vetting and controls, an organization could inadvertently import vulnerabilities via an AI algorithm or a pretrained model. For example, a tainted machine learning library could introduce spyware, or an external Large Language Model API might expose sensitive prompts if not used carefully. These are novel risks beyond standard software supply chain concerns.

Dynamic Model Behavior and Threats - Unlike static software, AI models can evolve (or degrade) over time as data drifts. They are also susceptible to adversarial attacks (specially crafted inputs that trick models) and issues like model theft or unauthorized model alterations. Traditional security monitoring might not catch if a model's performance is degrading due to concept drift or if someone has swapped in an unapproved model version. The Databricks AI Security Framework highlights risks such as model drift, model theft, and malicious ML libraries that can impact AI system outputs. Mitigating these requires new practices like model validation, provenance tracking, and runtime monitoring for anomalies in predictions.

Regulatory Compliance and Ethical Use - AI deployments are increasingly subject to regulations (GDPR, HIPAA, upcoming AI-specific laws) and scrutiny around bias, fairness, and transparency. A major challenge is ensuring AI systems comply with these requirements. For instance, in finance, an AI model must be auditable and fair to meet regulatory approval; in healthcare, patient data used for AI must remain confidential and models must not expose private information. Traditional security frameworks alone don't address AI ethics or compliance in model outcomes. It's necessary to extend governance to cover things like data lineage (to prove where training data came from), model decision traceability, and content filtering for AI-generated outputs.

In summary, AI security spans multiple domains - it is simultaneously a data security issue, a software/application security issue, and a novel ML issue. The reality is it is all of the above. Recognizing this, our team follows a holistic approach: we integrate AI risk management into our security program at every stage of the AI development lifecycle. One key resource guiding us is the Data & AI Security Framework (DASF), which catalogs dozens of AI-specific risks and maps them to actionable controls.

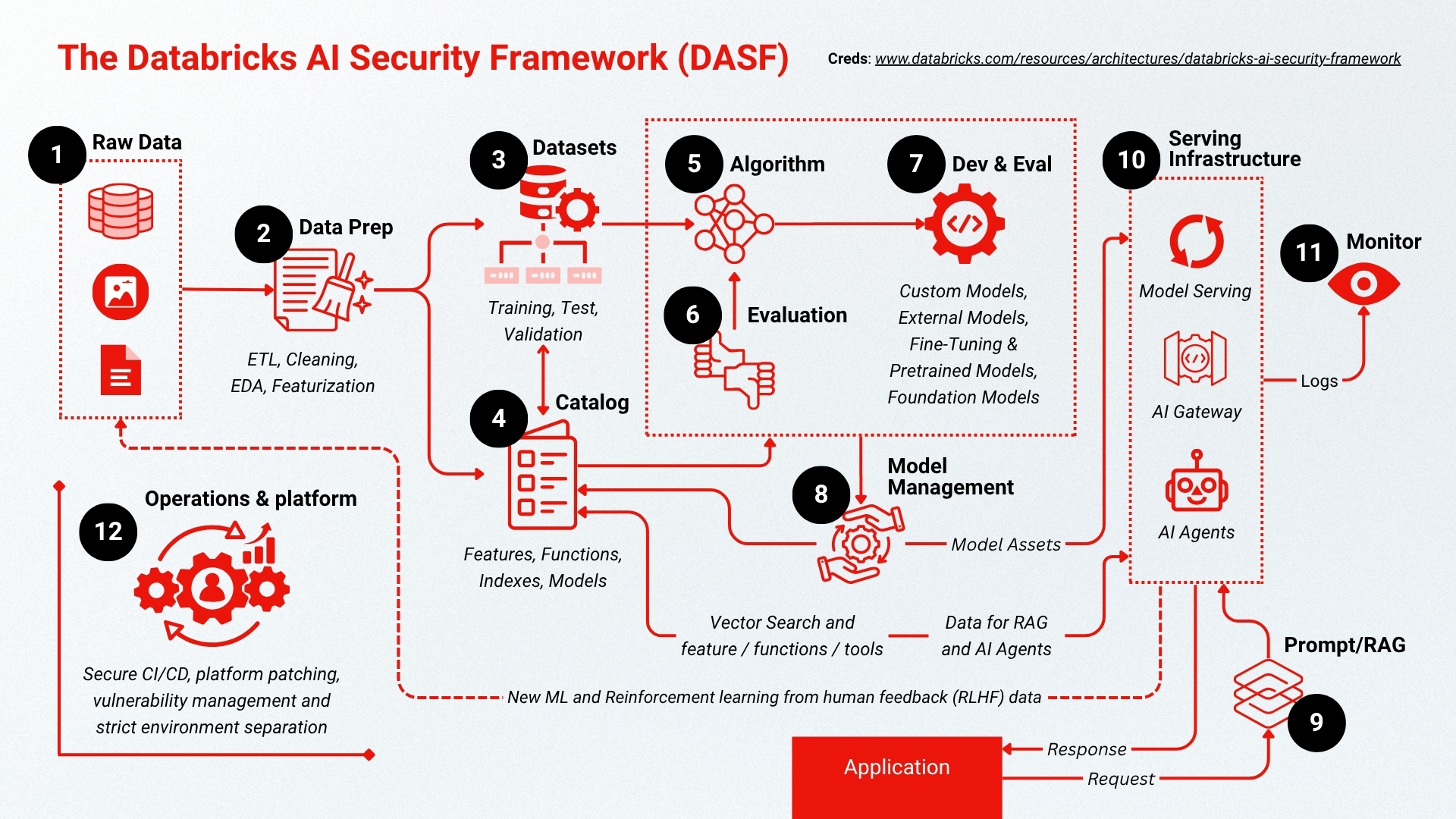

The Data & AI Security Framework (DASF) - A Holistic Approach

To tackle AI security systematically, our team uses the Data & AI Security Framework (DASF) as a foundation. The DASF is an actionable framework (initially developed by Databricks in collaboration with industry experts) that enumerates the 12 key components of a modern AI/ML system - from data ingestion and model development to deployment and platform infrastructure - and outlines the risks and best practices at each step.

Stage I: Data Operations (components 1-4)

This phase establishes the groundwork by managing the ingestion, preparation, and organization of data—critical steps for building reliable machine learning systems.

1. Raw Data - Collects inputs from various formats and sources, whether structured or unstructured. At this stage, data must be securely stored and protected with access policies.

2. Data Preparation - Involves cleaning data, conducting exploratory analysis, and transforming features as part of the ETL pipeline to create meaningful input for models.

3. Dataset Management - Ensures datasets are properly segmented for training, testing, and validation. All data should be version-controlled and reproducible.

4. Data Cataloging - Maintains a centralized registry for data assets such as features, indexes, models, and functions to facilitate discovery, governance, and secure collaboration

Risk Management Practices:

Encryption, access controls, audit logging, and data lineage tracking are essential to restrict data access and prevent unauthorized modifications.

Stage II: Model Operations (Components 5-8)

This stage covers how models are created, tested, and managed throughout their lifecycle

5. Algorithms - Refers to the core ML techniques used to build models, whether developed in-house or sourced externally.

6. Model Evaluation - Measures model performance using defined benchmarks and validation datasets.

7. Model Development - Encompasses creating models from scratch, using third-party APIs (e.g., OpenAI), refining large pre-trained models, or leveraging transfer learning.

8. Model Governance - Tracks versions, monitors performance, and maintains audit trails to ensure transparency, compliance, and repeatability.

Risk Management Practices:

Tools like experiment tracking, access restrictions, and detailed logging help safeguard the integrity of model development and changes.

Stage III: Deployment and Serving (components 9-10)

This stage is focused on the secure and reliable delivery of models into production environments.

9. Prompting and RAG (Retrieval-Augmented Generation) - Facilitates efficient, secure access to both structured and unstructured data in inference-based use cases.

10. Serving Infrastructure - Consists of APIs, model servers, and gateways that handle inference workloads and connect AI applications with deployed models.

Risk Management Practices:

Strategies like input sanitization, container isolation, traffic monitoring, and throttling help ensure resilience and reduce exposure to threats.

Stage IV: Platform and Operations (components 11-12)

This stage is responsible for ensuring platform integrity, managing environments, and maintaining system observability.

11. Monitoring - Collects logs and performance data to support ongoing model health checks, auditing, and operational visibility.

12. Operational Security - Covers CI/CD pipelines, patching routines, access policies, and strict segmentation between development, staging, and production systems.

Risk Management Practices:

Enforcing role-based access, applying security patches promptly, and isolating environments are key to maintaining a secure operational framework across the AI system.

The framework effectively bridges the gap between traditional IT security and AI/ML workflows. It provides a common language so that data scientists, IT, and security teams can work together on securing AI solutions.

What DASF provides: It maps 62 technical security risks to 64 recommended controls (as of beginning of 2025) spanning the AI lifecycle. These controls cover everything from ensuring data quality and access control, to monitoring model performance and protecting the underlying platform. The DASF builds on established standards (like NIST, ISO, MITRE ATLAS, and OWASP's guidelines for ML/LLMs) to deliver a defense-in-depth approach for AI security. In other words, it layers multiple security measures so that if one defense fails, others are in place - much like traditional cybersecurity, but tailored to AI's unique aspects. Crucially, DASF is actionable: it not only identifies risks, but also suggests concrete mitigations for each. For example, if data poisoning is a risk, the framework recommends controls like strict data validation, provenance tracking, and access restrictions on training data. If model theft is a risk, it suggests encryption of model artifacts and role-based access controls on the model registry. This actionable guidance means our team can quickly translate the framework into real policies and tool configurations in our environment. In fact, DASF 2.0 even provides direct mapping to platform-specific controls - for instance, if using Databricks, it points to built-in features or settings that implement each control.

By using DASF, our company ensures no aspect of AI security is overlooked. It forces us to consider security and governance at every phase: data ops, model development, deployment, and ongoing operations.

Let us explain some of the key DASF components.

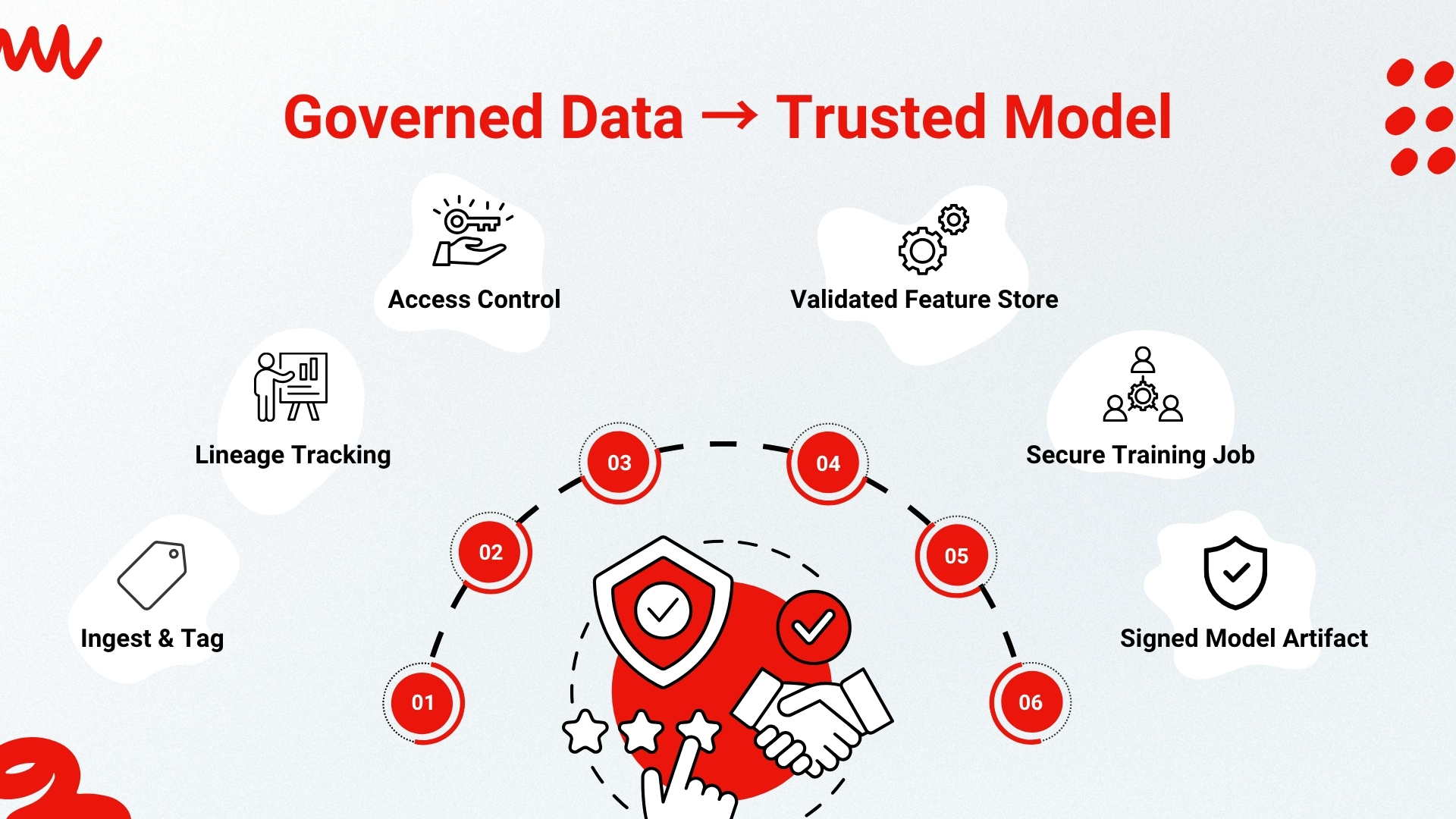

Catalog: Securing Data and Feature Assets

In any AI system, data is the bedrock. The DASF emphasizes that raw data ingestion, preparation, and cataloging form the foundation of a trustworthy model. At this stage, our focus is on data security and governance. Key challenges include controlling access to sensitive data, ensuring data quality (since corrupted or biased data can lead to insecure outcomes), and tracking lineage (knowing where data came from and how it's used).What is the Catalog? In DASF terms, the Catalog is a centralized registry for all data and AI assets - not just datasets, but also features, models, and functions. It supports discoverability and lineage tracking, and crucially, it enables governance and secure sharing of data across teams. In practice, this is where we implement policies about who can access which data, and we record metadata about data sources, preprocessing, and usage. Proper cataloging prevents the common pitfall of "shadow AI" projects using unvetted data. It ensures that only authorized, high-quality data flows into model training.

At TechFabric, we use Databricks Unity Catalog as a key tool to secure this component. Unity Catalog provides a unified governance layer for data and AI assets, allowing us to enforce consistent access controls, compliance policies, and monitoring across all data, notebooks, and models in our platform. With Unity Catalog, we can define fine-grained permissions (down to rows, columns, or features), automatically track data lineage end-to-end, and apply data classification tags (like PII) to trigger appropriate controls.

For example, if a dataset contains personal identifiable information, we tag it and ensure only specific roles can use it for model training, and even then the data might be masked or anonymized. Unity Catalog's built-in intelligent governance simplifies compliance by providing a single place to manage discovery, access, and auditing across our entire data estate. This significantly reduces risk and simplifies audits for us and our clients - we can readily demonstrate which data was used by which model, and that proper controls were in place. In securing the Catalog, we implement controls such as strong identity and access management (IAM), encryption of data at rest and in transit, and rigorous audit logging.

According to the DASF, access controls, encryption, data lineage tracking and audit logging are fundamental mitigations at this layer. Our team configures these controls so that every data access is accounted for. We also validate data upon ingestion to detect anomalies or possible poisoning (e.g., sudden unexpected changes in data distributions). By governing data from the outset, we prevent many issues from ever propagating into the AI models. In sectors like healthcare and finance, this also helps meet regulatory requirements - e.g. ensuring only de-identified data is used for model training, or maintaining an audit trail for compliance reporting.

See more about Unity Catalog in our previous article: Beyond Basic Data Catalogs: How Unity Catalog Solves Real Enterprise Governance Challenges

Algorithm: Securing ML Code and Libraries

Securing the algorithms and code behind AI models is vital. Beyond traditional software security, AI-specific risks like compromised libraries or manipulated algorithms introduce unique challenges. Our team addresses these threats by thoroughly reviewing all ML code and dependencies, restricting unvetted packages, and ensuring provenance through secure artifact repositories. We track every model experiment with Databricks and MLflow and Azure AI Foundry, maintaining strict version control and audit capabilities to detect any unauthorized changes. We also educate our team on secure AI coding practices, sandbox training environments, and continuously monitor resource usage to prevent malicious activities.

Evaluation: Ensuring Robust and Secure Models

Rigorous model evaluation is crucial before deployment. Our evaluation process extends beyond accuracy, incorporating adversarial testing, bias detection, and ethical compliance checks to ensure models aren't vulnerable to exploitation. Especially for generative AI, we proactively test for prompt injection vulnerabilities and other crucial metrics. Additionally, we protect evaluation datasets against tampering, ensuring reproducible, trusted evaluation results. Only models passing comprehensive security, ethical, and performance evaluations advance, significantly reducing deployment risks and fostering client confidence across domains like healthcare and finance.

Model Management: Governance Throughout the Lifecycle

Effective Model Management ensures secure governance throughout a model's lifecycle—from deployment to retirement. Our centralized Model Registry, powered by Databricks Model Registry (MLflow) or Azure AI Foundry, provides rigorous access controls, versioning, and comprehensive audit trails. Role-based permissions and enforced model approval workflows safeguard against unauthorized changes or deployments. Encryption and signing protect model integrity, while detailed audit logs ensure traceability and compliance with regulations. By meticulously managing models, we deliver secure, transparent, and trustworthy AI solutions that clients across regulated industries rely upon.

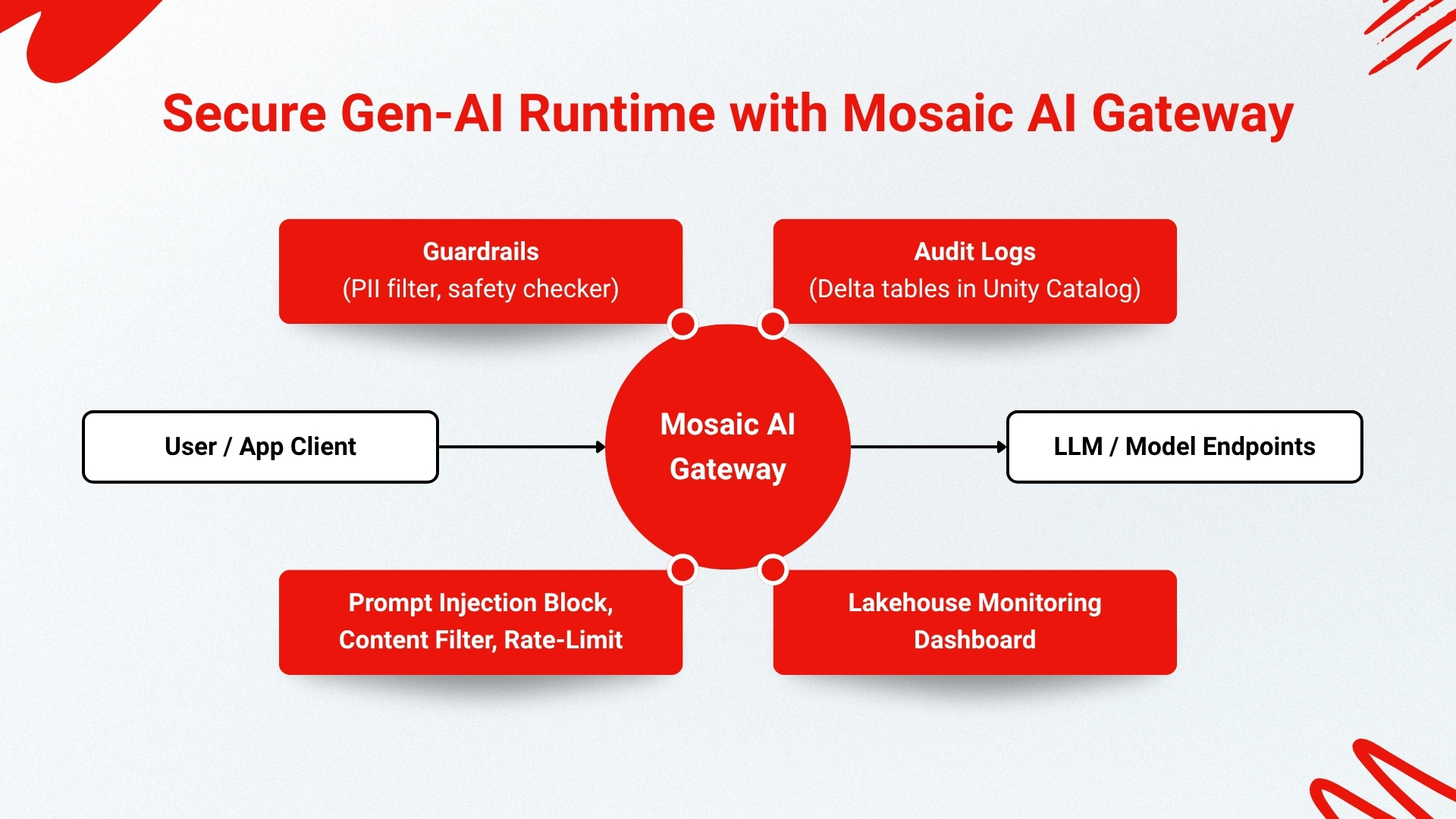

Operations: Secure and Continuous AI Monitoring

Operational security (MLOps) ensures AI solutions remain secure and reliable post-deployment. Our team continuously monitors model performance, accuracy drift, and security anomalies, quickly identifying potential threats or malicious activities. Utilizing Databricks Lakehouse Monitoring, we capture telemetry for real-time alerting. Databricks Mosaic AI Gateway enhances protection for generative AI applications, applying centralized guardrails, filtering sensitive content, and logging activities for compliance audits. Our strict CI/CD practices, robust vulnerability management, environment isolation, and AI-specific incident response plans guarantee that our operational environment remains secure, resilient, and trustworthy for our clients.

Platform: Securing the Underlying AI Platform

The Platform component refers to the foundational technology environment on which data and AI workloads run. This includes cloud infrastructure (or on-premise servers), data pipelines, databases, and the overall Data & AI platform software (like Databricks) that orchestrates everything.

Securing the platform is non-negotiable - if the underlying platform is breached, it could undermine all higher-level controls. Thus, we treat our AI platform with the same rigor as traditional IT systems: hardening, monitoring, and aligning to security benchmarks.

We leverage the Databricks Lakehouse Platform (but not limited to it) as our core environment because it comes with robust security features out-of-the-box (identity federation, encryption, network isolation options, etc.) and is designed to meet industry compliance standards. For instance, Databricks allows us to deploy in a customer's cloud account with a secure cluster setup (no public IPs if not needed, secure credential passthrough, etc.), ensuring data stays within approved boundaries. We configure strict role-based access controls (RBAC) at the workspace and cluster levels, so users only access the data and compute they are permitted. The platform is also configured to use single sign-on and MFA for user access, adding layers of authentication security.

In line with DASF recommendations, our platform security efforts focus on areas like vulnerability management, standardization, and compliance. We maintain images and templates for AI compute environments that are pre-hardened (e.g., unnecessary services disabled, only approved packages included). We regularly scan these environments for vulnerabilities and apply patches. Lack of such hygiene is a risk DASF calls out - e.g., poor vulnerability management or lack of repeatable standards can leave holes for attackers. By enforcing consistent build standards for our AI platform, we close that door.

Another key aspect is environment separation. We ensure that development, testing, and production environments are clearly separated and access between them is tightly controlled. This prevents, for example, a less secure dev environment from being a jumping point into production. It also aligns with compliance needs - for instance, data used in development might be synthetic or masked, whereas production handles real customer data with stricter controls. DASF explicitly notes that environment isolation and strict separation of dev/staging/prod is vital to platform security, and we enforce exactly that.

By request, we conduct User Acceptance Testing (UAT) sessions with select customers, enabling them and their users to directly experience the product's quality.

The Platform is also where Unity Catalog and Mosaic AI Gateway (discussed earlier) operate, tying together governance and security across the stack. By capturing all metadata and logs in the platform, we gain a bird's-eye view of our AI estate. Unity Catalog, as mentioned, gives us unified governance, and Mosaic AI Gateway gives us a centralized handle on all AI service interactions. These tools improve not only security but also compliance and governance for our generative AI applications, because they allow us to prove control over data and model usage.

For example, if a client in the public sector needs to ensure no citizen data is used by an AI model without authorization, we can demonstrate that through our platform logs and catalog lineage. In essence, securing the AI platform is about creating a strong, secure foundation so that all the higher-level AI work can be trusted. Our company's platform security mirrors the best practices of enterprise IT security (from network firewalls to endpoint protection on developer workstations) combined with AI-specific measures. The result is an environment where data engineers and data scientists can innovate freely, while our security engineers quietly ensure that compliance and protection are continuously enforced in the background.

It's a partnership across our team at TechFabric - much like DASF itself fosters a partnership between data/AI teams and security teams.

Conclusion

AI has the potential to revolutionize businesses across every domain, but achieving this requires a relentless focus on security, governance, and trust. By anchoring our approach in the Data & AI Security Framework (DASF), we systematically address risks throughout the AI lifecycle - from data catalogs and algorithms to model management and platform security - ensuring our AI models deliver value safely.

Our strategy leverages advanced tools like Databricks Unity Catalog for unified governance and the Mosaic AI Gateway for robust security and real-time guardrails. This provides clients with confidence in their AI initiatives, proactively addressing compliance and reducing risk exposure. In practical terms, banks can safely deploy AI-driven services with auditable decisions; healthcare providers can benefit from AI while maintaining HIPAA compliance; and retailers can personalize experiences without compromising data privacy or ethics.

TechFabric has successfully implemented these practices across multiple industries, always tailoring solutions without compromising core principles. We firmly believe you don't have to sacrifice security for innovation - you can have both. By embedding security into every AI project, our approach helps clients accelerate AI adoption securely. Reach out to our team to explore how we can help you build innovative, compliant, and trustworthy AI solutions, unlocking AI's full potential with peace of mind.